In the video game industry, making game assets is a major time investment, sometimes more than coding itself.

Modern renderers use a realistic shading model called physically based rendering (PBR). For each material, the artist must specify values such as its colour, metalness, and roughness.

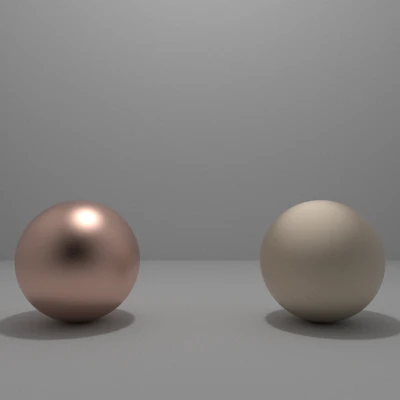

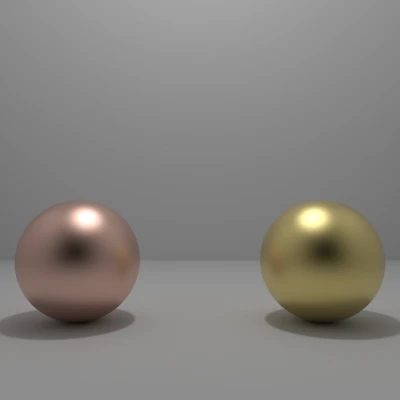

The left-hand sphere is smooth, the right-hand one is rough. They have the same colour.

Finding the correct values by hand requires a lot of trial and error. Here is an apple with random PBR values:

After some tweaking to find the correct values:

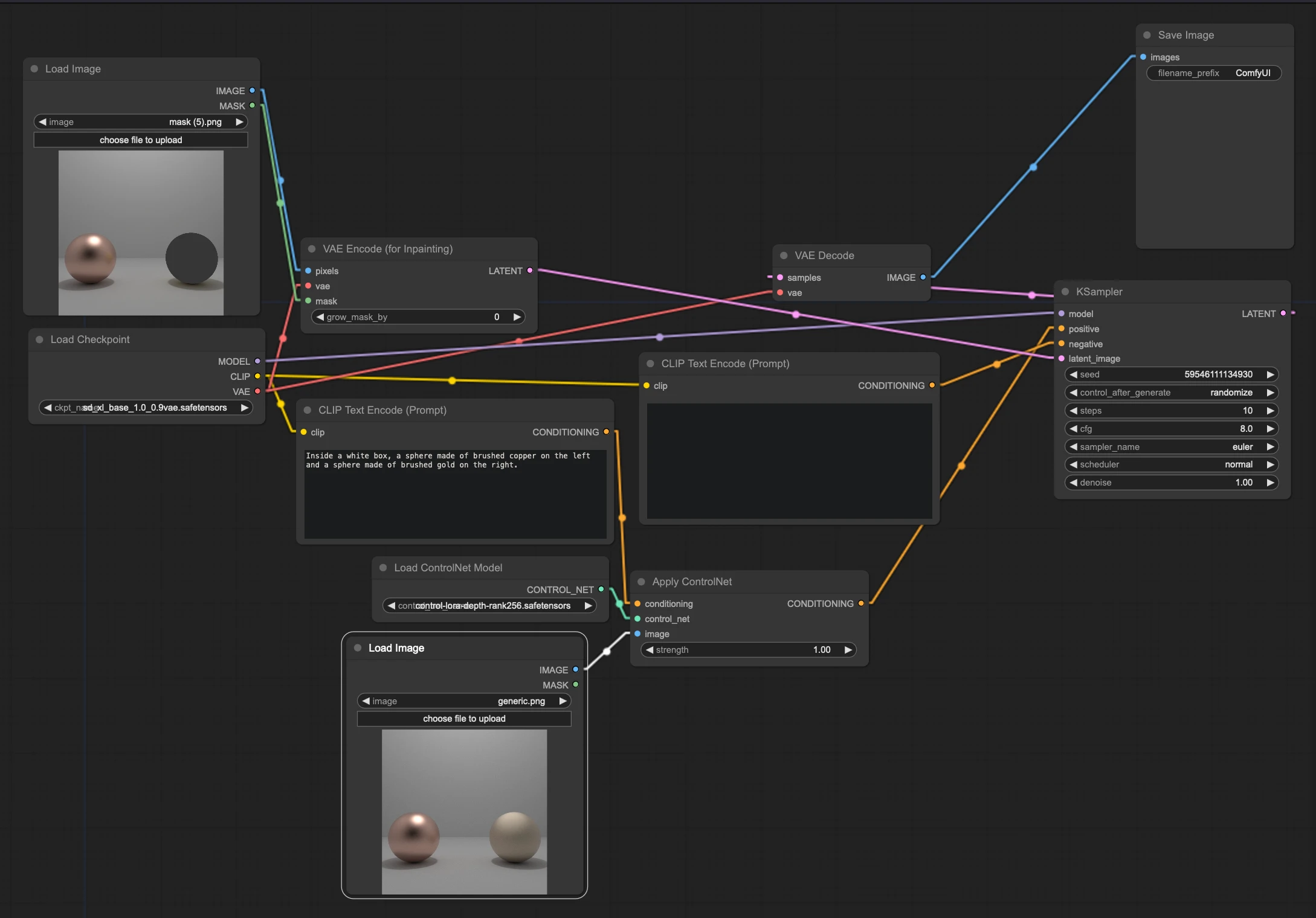

More natural-looking, is it not? Unfortunately, while there are free sources of PBR materials, one has to be ready to invest time or money to get precise materials. Let us see how we can automate that. Our plan:

- Differentiable rendering: render a scene with an initialised material

- Generative AI: replace the material in the render with the one we want

- Inverse rendering: get the PBR values

Renderers transform a 3D scene into a 2D picture. Mathematically, a renderer is a function from scene parameters to pixel values. Through automatic differentiation, we can derive a second function that tells us how the output changes for a given change in the input. More relevantly for us, given a particular picture, we can use those derivatives to search for scene parameters, including PBR values, that reproduce it.
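To make the idea concrete, here is a minimal sketch of forward-mode automatic differentiation on a one-pixel toy "renderer". The `Dual` class and the `shade` function are illustrative assumptions, not part of any real renderer: a real differentiable renderer applies the same principle to millions of pixels and parameters.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Dual:
    """A value paired with its derivative with respect to one chosen input."""
    value: float
    deriv: float = 0.0

    def __add__(self, other):
        other = _lift(other)
        # Sum rule: derivatives add.
        return Dual(self.value + other.value, self.deriv + other.deriv)

    __radd__ = __add__

    def __mul__(self, other):
        other = _lift(other)
        # Product rule: (uv)' = u'v + uv'.
        return Dual(self.value * other.value,
                    self.deriv * other.value + self.value * other.deriv)

    __rmul__ = __mul__


def _lift(x):
    """Treat plain numbers as constants (derivative 0)."""
    return x if isinstance(x, Dual) else Dual(float(x))


def shade(albedo, light, cos_theta):
    """Hypothetical one-pixel renderer: simple diffuse shading."""
    return albedo * light * cos_theta


# Seed the input we want to differentiate against with derivative 1.0.
pixel = shade(Dual(0.5, 1.0), light=2.0, cos_theta=0.8)
print(pixel.value)  # brightness of the pixel
print(pixel.deriv)  # change in brightness per unit change in albedo
```

The same call that computes the pixel also yields its sensitivity to the material parameter, which is exactly the information an optimiser needs.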

For our scene, I put two spheres in a box: one made of copper, the other made of a generic material. Here is the render made with Mitsuba:

If you have a scene in Mitsuba's well-documented XML format, rendering is simple:
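A minimal sketch of that rendering step, assuming Mitsuba 3's Python bindings are installed. The function name `render_scene`, the file paths, and the sample count are hypothetical choices made for illustration; the import is placed inside the function only so that the snippet can be loaded without Mitsuba present.

```python
def render_scene(scene_path, out_path, spp=64):
    """Render a Mitsuba XML scene and save the result as an image file."""
    # Assumption: Mitsuba 3 is installed (pip install mitsuba).
    import mitsuba as mi

    # A variant must be selected before any other Mitsuba call.
    mi.set_variant("scalar_rgb")

    scene = mi.load_file(scene_path)   # parse the XML scene description
    image = mi.render(scene, spp=spp)  # spp = samples per pixel
    mi.util.write_bitmap(out_path, image)
    return image
```

Calling `render_scene("scene.xml", "render.png")` would then write the render to disk. For the inverse-rendering step later on, a differentiable variant such as `llvm_ad_rgb` is needed instead of `scalar_rgb`.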

Generative AI

The next step is to replace the generic material with the one we want (gold in our case study). Stable Diffusion (SD) is quite good at generating realistic materials. ControlNet, an extension, helps SD preserve the 3D shapes. I masked the generic sphere and asked SD to replace it with gold:

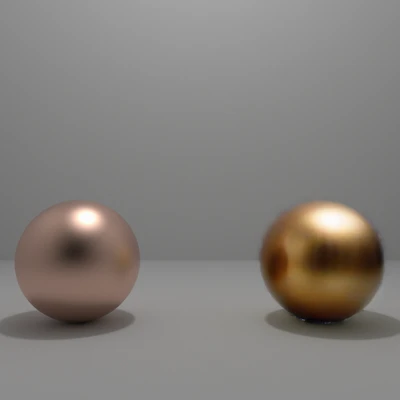

Here is the result:

The lighting is far from correct, but the material itself is recognisable: gold!

Inverse rendering

The initial scene had the following parameters:

The parameters eta and alpha influence the colour and roughness of the material, respectively.

Using gradient descent (similar to the Mitsuba inverse-rendering tutorial), we get the following values:

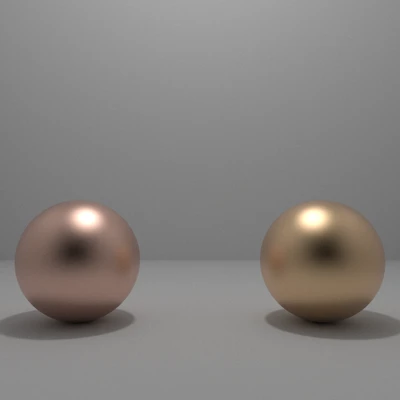
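The optimisation loop behind this step can be illustrated with a toy stand-in for the renderer. Everything here is an assumption made for the sake of a self-contained example: the "renderer" is just a per-channel tint multiplied by a fixed shading value per pixel, the gradient is written by hand rather than obtained from Mitsuba, and `GOLD_LIKE` is an illustrative colour, not a measured one.

```python
# Hypothetical per-pixel light intensities for a three-pixel "image".
SHADING = [0.2, 0.5, 0.9]


def render(tint):
    """Toy renderer: each pixel is the RGB tint scaled by its shading."""
    return [[c * s for c in tint] for s in SHADING]


def loss_and_grad(tint, target):
    """Squared error averaged over pixels, and its gradient w.r.t. tint."""
    image = render(tint)
    n = len(SHADING)
    loss = 0.0
    grad = [0.0, 0.0, 0.0]
    for s, pixel, ref in zip(SHADING, image, target):
        for c in range(3):
            err = pixel[c] - ref[c]
            loss += err * err / n
            # d(err^2)/d(tint_c) = 2 * err * s, since pixel_c = tint_c * s.
            grad[c] += 2.0 * err * s / n
    return loss, grad


def fit_tint(target, steps=200, lr=0.5):
    """Plain gradient descent from a neutral grey starting tint."""
    tint = [0.5, 0.5, 0.5]
    for _ in range(steps):
        _, grad = loss_and_grad(tint, target)
        tint = [t - lr * g for t, g in zip(tint, grad)]
    return tint


GOLD_LIKE = [1.0, 0.77, 0.34]  # illustrative colour, not a measured value
target = render(GOLD_LIKE)     # plays the role of the Stable Diffusion image
recovered = fit_tint(target)
print([round(t, 3) for t in recovered])
```

In the real pipeline, the render and its gradient come from the differentiable renderer, and the target is the image produced by Stable Diffusion, so the optimiser is fitting the material to that image rather than to a known ground truth.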

Here is a render that uses these values with the same roughness as the left-hand sphere:

Quite credible! For comparison, here is a physically correct gold material:

A bit greener than expected, is it not? Rather than physical realism, our approach favours psychological realism, as Stable Diffusion is influenced by the “idea” of gold used by artists.